Estimating functions of multiple parameters

2019-03-10

I’m just back from Boston, where I presented some recent work[1] at the American Physical Society’s March Meeting. I’m still finishing the paper, so in the meantime, for those interested, I’m going to expand on some of the main points of the talk here on my blog.

Unattainable bounds

I warned in my talk that treating the problem of estimating a function of multiple parameters as a single-parameter estimation problem can lead you astray. You’ll generally calculate unattainable bounds and introduce unnecessary errors in your estimates. The unattainable bounds are discussed in a specific case in the paper[2] that inspired this work, which I collaborated on with Zachary Eldredge, Michael Foss-Feig, Steve Rolston, and Alexey Gorshkov at the University of Maryland. Section III.B of that paper goes into some detail about why naïvely calculated single-parameter bounds are generally unattainable for the sensor networks we considered. Here I present another illustration of the problem, because it never hurts to see something explained multiple ways!

A classical problem is the simplest way to get the point across. Imagine a two-dimensional family of Gaussian distributions parametrized by their mean and with constant covariance:

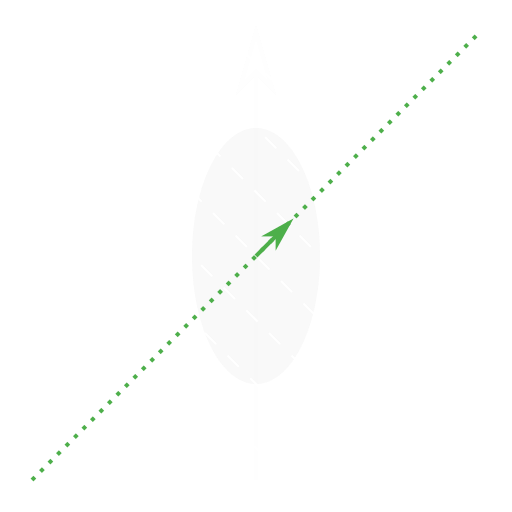

Given the ability to sample from a distribution from this family, how precisely can we estimate the sum of the two components of the mean? The picture below illustrates this problem.

If we treat this like a single-parameter problem, we’ll calculate the Fisher information for the sum using the partial derivative along the direction that adds half a unit to each component of the mean, since that is the most direct way to increase the sum by one unit.

This suggests that fluctuations in the sum ought to be smaller than 2. This agrees with the picture, where the shaded covariance ellipse doesn’t quite advance to the level surface along the green dotted line depicting movement in the direction that adds equal amounts to both components of the mean.
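In generic notation, the single-parameter calculation can be sketched as follows. The symbols are labels assumed here for illustration rather than the notation of the talk: $\mu = (\mu_1, \mu_2)$ is the mean, $\Sigma$ the constant covariance, $\theta = \mu_1 + \mu_2$ the sum, $N$ the number of samples, and $v = (\tfrac{1}{2}, \tfrac{1}{2})^\top$ the direction that raises $\theta$ by one unit. For a Gaussian family with known covariance, the Fisher information matrix for the mean is $\Sigma^{-1}$, so the information along $v$ and the bound it implies are

$$
F_\theta^{\text{single}} = v^\top \Sigma^{-1} v,
\qquad
\operatorname{Var}(\hat\theta) \ge \frac{1}{N \, v^\top \Sigma^{-1} v}.
$$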

We know something about how our estimates ought to be constructed here, though. We should sample many times and take the sum of the averages of the two sampled coordinates. The distribution of estimates we get with this procedure is the original distribution marginalized over lines of constant sum, just with variance inversely proportional to the number of samples drawn. We can calculate this marginal covariance by reparametrizing the distribution in terms of the sum and a second, complementary coordinate.

This tells us that fluctuations in the sum are actually greater than 2, showing that the calculation from the single-parameter approach is in error.
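The sampling procedure described above is easy to check numerically. The sketch below uses a hypothetical mean and covariance chosen only for illustration (they are not the values from the example in this post, so the numbers differ), and compares the empirical variance of the sum-of-averages estimate with the marginal variance of the sum divided by the number of samples.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical mean and covariance, chosen only for illustration.
mean = np.array([1.0, 1.0])
sigma = np.array([[2.0, 0.5],
                  [0.5, 1.0]])

n_samples = 100    # samples averaged in each estimate
n_trials = 5000    # independent repetitions of the whole procedure

# Shape (n_trials, n_samples, 2): each trial draws n_samples points.
draws = rng.multivariate_normal(mean, sigma, size=(n_trials, n_samples))

# Estimate of the sum in each trial: average each coordinate, then add.
estimates = draws.mean(axis=1).sum(axis=1)

w = np.ones(2)  # gradient of the sum with respect to the mean
empirical_variance = estimates.var(ddof=1)
predicted_variance = w @ sigma @ w / n_samples  # marginal variance of the sum over N

print(f"empirical: {empirical_variance:.4f}, predicted: {predicted_variance:.4f}")
```

With these assumed values, the two variances should agree to within Monte Carlo error, and the spread shrinks as `n_samples` grows.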

As I mentioned in my talk, this is because the single-parameter problem assumes additional parameters are fixed to known values. As the picture makes clear, if we actually knew the remaining parameter, then the conditional distribution for our estimates of the sum would have fluctuations smaller than 2, but since we have uncertainty about that parameter, we need to consider the marginal distribution, which has fluctuations greater than 2 (depicted as the covariance ellipse extending beyond the level surface).
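The gap between the conditional and marginal distributions can be made concrete with a small calculation. This is a sketch under stated assumptions: the covariance is hypothetical (not the one from the example in this post), and the complementary coordinate is taken to be the difference of the two components, which is one natural choice rather than something fixed by the problem.

```python
import numpy as np

# Hypothetical covariance, chosen only for illustration.
sigma = np.array([[2.0, 0.5],
                  [0.5, 1.0]])

# Assumed change of coordinates from (x1, x2) to (sum, difference).
A = np.array([[1.0, 1.0],
              [1.0, -1.0]])
sigma_sd = A @ sigma @ A.T  # covariance in (sum, difference) coordinates

# Marginal variance of the sum: the difference is unknown.
marginal_var = sigma_sd[0, 0]

# Conditional variance of the sum: the difference is known (Schur complement).
conditional_var = sigma_sd[0, 0] - sigma_sd[0, 1] ** 2 / sigma_sd[1, 1]

# The single-parameter Fisher information along v = (1/2, 1/2)
# reproduces the conditional variance, not the marginal one.
v = np.array([0.5, 0.5])
single_param_var = 1.0 / (v @ np.linalg.inv(sigma) @ v)

print(marginal_var, conditional_var, single_param_var)
```

For this assumed covariance the marginal variance is 4 and the conditional variance is about 3.5, which matches the inverse of the single-parameter Fisher information. By the Cauchy-Schwarz inequality the conditional value can never exceed the marginal one, and the two coincide only when the sum and the complementary coordinate are uncorrelated.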

I’m planning a follow-up post on the form of the optimal measurement strategy for the example given in my talk, but I’m also open to addressing other specific questions people raise.

[1] One from many: Scalar estimation in a multiparameter context, 2019 APS March Meeting, Boston, MA, March 7, 2019 (abstract: K28.00012, slides)

[2] Zachary Eldredge, Michael Foss-Feig, JAG, S. L. Rolston, and Alexey V. Gorshkov, Optimal and secure measurement protocols for quantum sensor networks, Phys. Rev. A 97, 042337 (2018), DOI:10.1103/PhysRevA.97.042337, arXiv:1607.04646