Gambler's Ruin

2013-10-17

Over the summer, I took the opportunity to start watching through the lectures on the Perimeter Institute website on Quantum Foundations. During one of the lectures on probability, the following scenario was put forth:

Original Problem

Suppose I offer you the opportunity to place a bet on a toss of my coin. Placing a bet involves splitting your money between heads and tails. After the toss, the money you bet on the side that came up is doubled and returned to you (I get to keep the money you bet on the side that did not come up). You also know that my coin is more likely to come up heads than tails. Specifically, the probability that heads will come up lies in the open interval . What strategy should you use to place your bet?

Naïve Approach

The naïve strategy is to try and maximize the amount of money you make on average. To simplify calculations, let us assume your initial money is , and let us denote the fraction of money you bet on heads by . The money you make on average after one toss can be calculated as shown below:

Since means is positive, this is maximized when —i.e. when you bet all your money on heads. Using this strategy, on average you will make . Since you make money with this strategy on average, you will make even more money on average if you continue to place bets. After placing bets, the average money you will have is . This seems good, until you consider that with this strategy you will lose all of your money if only a single tails comes up. How likely is this to happen? The probability of not getting all heads for tosses is , which very quickly approaches as you begin placing more bets. If you place bets ad infinitum, as this strategy tells you to do, you will certainly lose all of your money (hence the name, Gambler’s ruin).

Modified Approach

The question I wanted to answer was: is there a strategy that can take advantage of the bias of the coin without leading to ruin? To avoid the pitfall of the previous strategy, I wanted to find strategies that gave you a better chance of making money than losing money. Specifically, which values of give you a higher probability of making money than losing money as you place more and more bets?

My gut feeling was that there was a transition fraction such that when betting many times if you would most likely make money, whereas if you would most likely lose money.

Numerical Investigation

Before embarking on an analytic pursuit of this transition, I decided to run a quick simulation of the scenario in python. When considering only a finite number of throws, the probability of making money can be calculated exactly. This is done by considering all sequences of coin tosses and summing the probabilities of those that result in you making money. To do that, we need to calculate the amount of money you have given a sequence of coin tosses. Suppose after a partial sequence of tosses the amount of money you have is . How much money would you have after the next toss if it comes up heads? We double the fraction of money you bet on heads, so . Similarly, if a tails had come up instead you would have . These examples make it clear that each coin toss has the result of multiplying your previous amount of money by a number depending on the outcome. Since it doesn’t matter what order we multiply these numbers together in, we can see that it doesn’t matter what order the outcomes are arranged in. All that is important is how many times either heads or tails came up. From this, and the fact that we are defining our starting money , we can calculate the money you have after tosses:

Here stands for the number of times heads came up (the favorable

outcomes, if you will). For the purposes of our simulation, we also need to know the number of individual sequences where heads comes up times. This is given by the combinatorial expression . The responsibility of our python program is now to calculate which values of result in for a given , multiply the probability of getting a sequence with heads by the number of different sequences with heads, and sum all these probabilities together. We can calculate the probability of making money as:

My first program was a little clumsy in that it checked all values from to for , when in reality once the minimum is found all higher values of will give (getting more heads is not going to decrease your return). The following python code uses scipy to calculate the probabilities and matplotlib to display the results:

from matplotlib import pyplot as plt

from scipy.special import binom

p = 0.8 # Probability of getting heads

N = 1000 # Number of coin flips

y_samples = 1000

# Array of fractions of money to bet on heads between 1/2 and 1

y_vals = [1/2 + x/(2*y_samples) for x in range(1, y_samples)]

# Array of minimum numbers of heads required to make money

min_f_vals = []

# Find the minimum value of f for all betting fractions y

for y in y_vals:

# Set f to the max value by default (guaranteed to be profitable)

f_min = N

for n in range(N + 1):

if y**n*(1 - y)**(N - n) > 1/2**N:

f_min = n

break

min_f_vals.append(f_min)

# For each y sum the probabilities of all f that result in gain times the

# number of sequences that give each desired f

gain_probabilities = [sum([p**f*(1 - p)**(N - f)*binom(N, f) for f in

range(f_min, N + 1)]) for f_min in min_f_vals]

fig = plt.figure()

ax = fig.add_subplot(111)

ax.plot(y_vals, gain_probabilities)

ax.plot([1/2, 1 - 1/y_samples], [1/2, 1/2], linestyle='--')

plt.xlabel(r'$y$')

plt.ylabel(r'$P_\mathrm{gain}$')

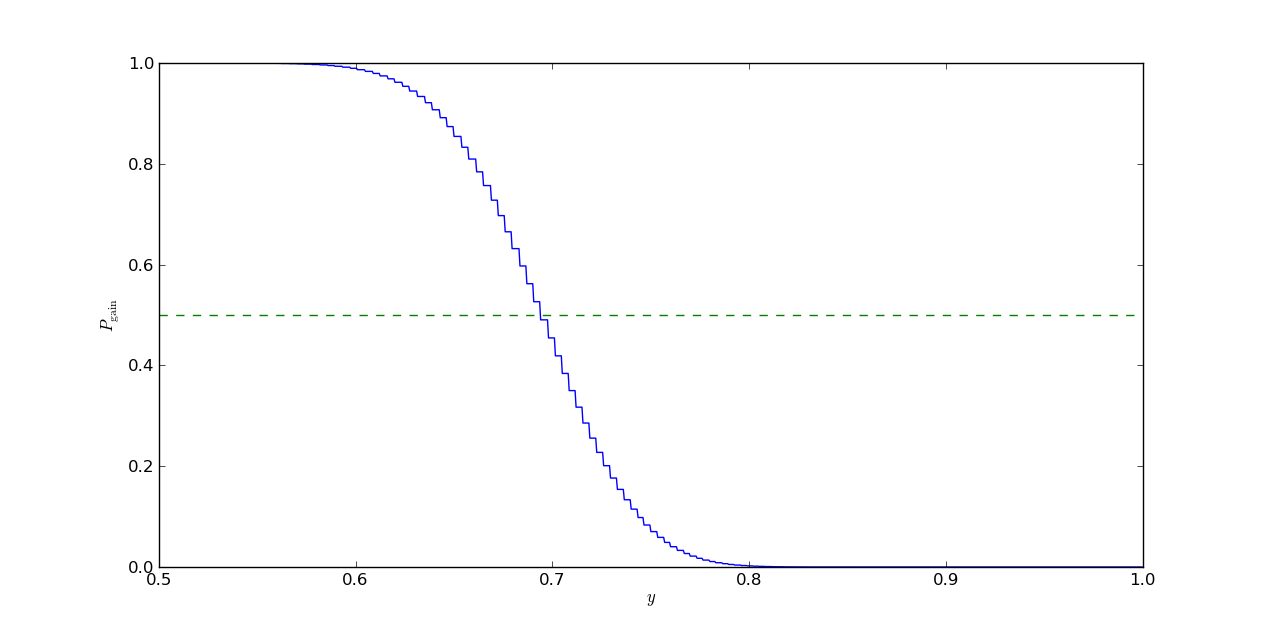

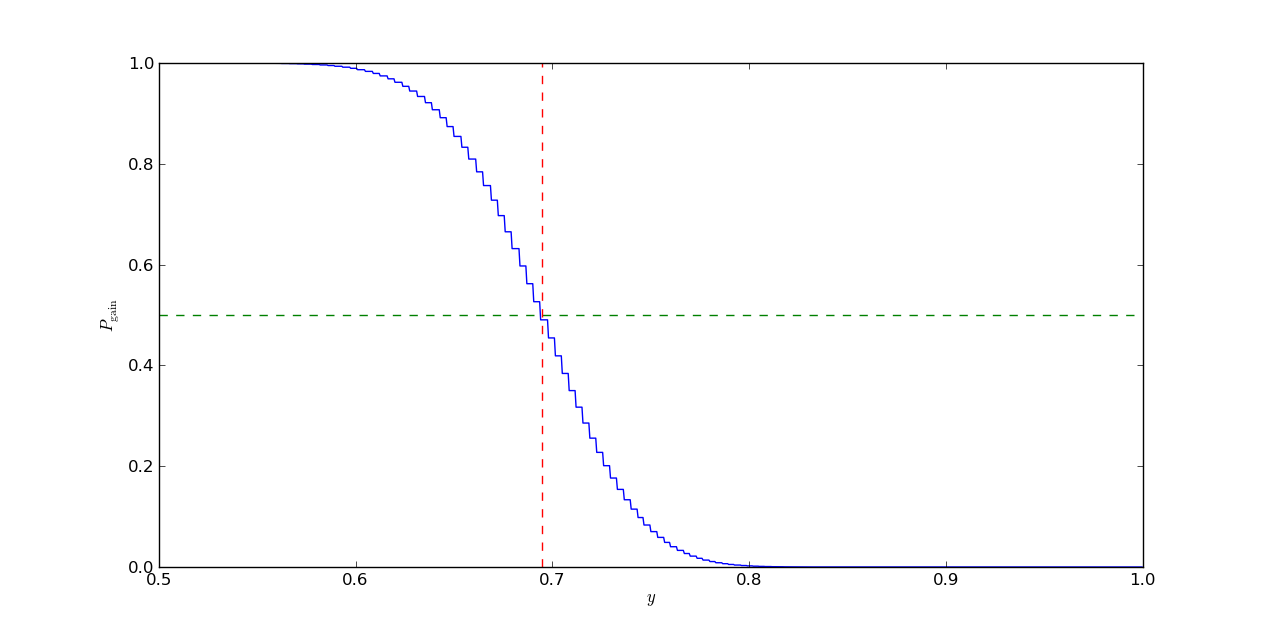

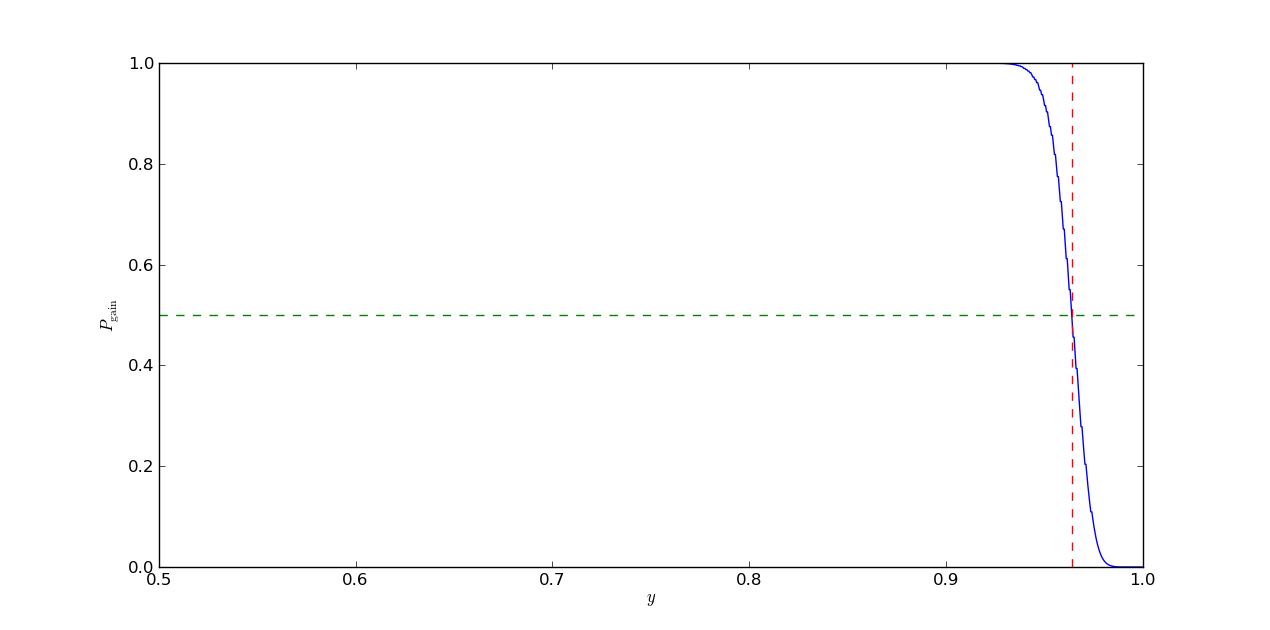

plt.show()Running the code results in output like below:

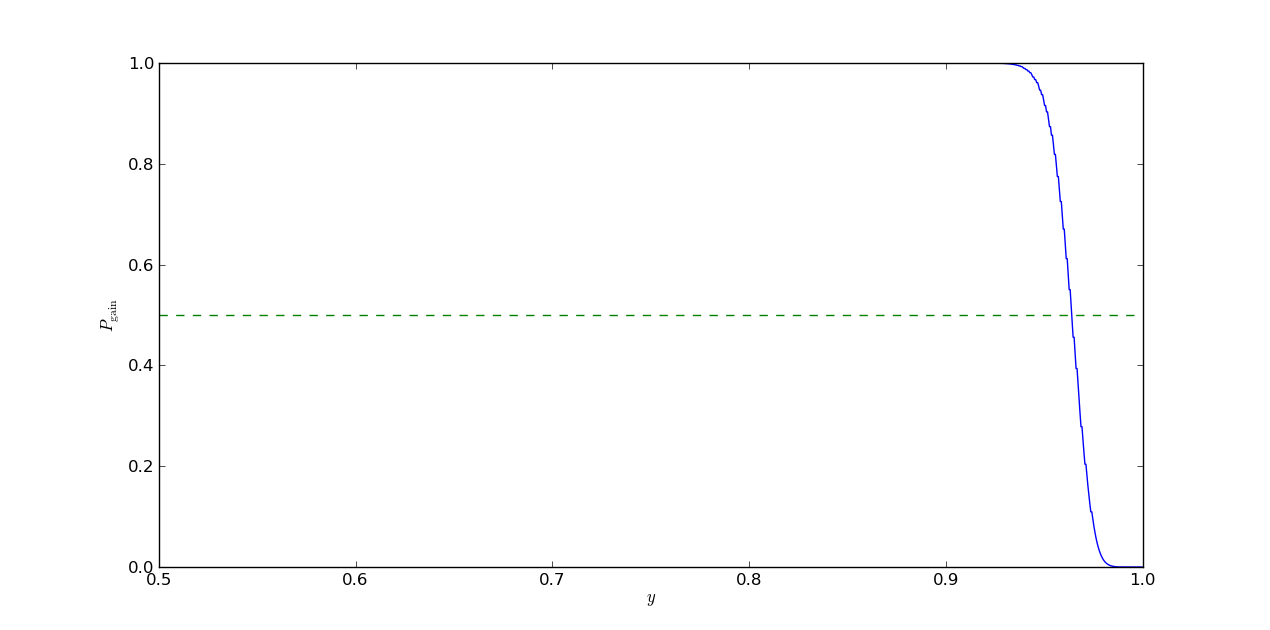

The value of for which crosses the mark should approach the value of as gets larger and larger. Playing around with various values for , we see the crossing point becomes relatively constant as gets larger (the code I presented doesn’t do well for values of much higher than , likely because the exponentiation and binomial coefficients blow up). We also see the threshold is a function of , as expected.

Analytic Solution

Computing the analytic solution involves taking the limit of infinite coin tosses to find which values of satisfy the following inequality:

In this scenario we can employ the typical sequences theorem, which tells us that in this limit the probability that our sequence is in the typical set (where ) is equal to 1. Therefore, if sequences in the typical set have a gain greater than we will with certainty make money, and if they have a gain less than we will with certainty lose money. The value of for which the gain is equal to is the value at which we transition from making to losing money, and is what we are interested in calculating as :

If we take the logarithm of both sides and do a little rearranging, we find this expression equivalent to:

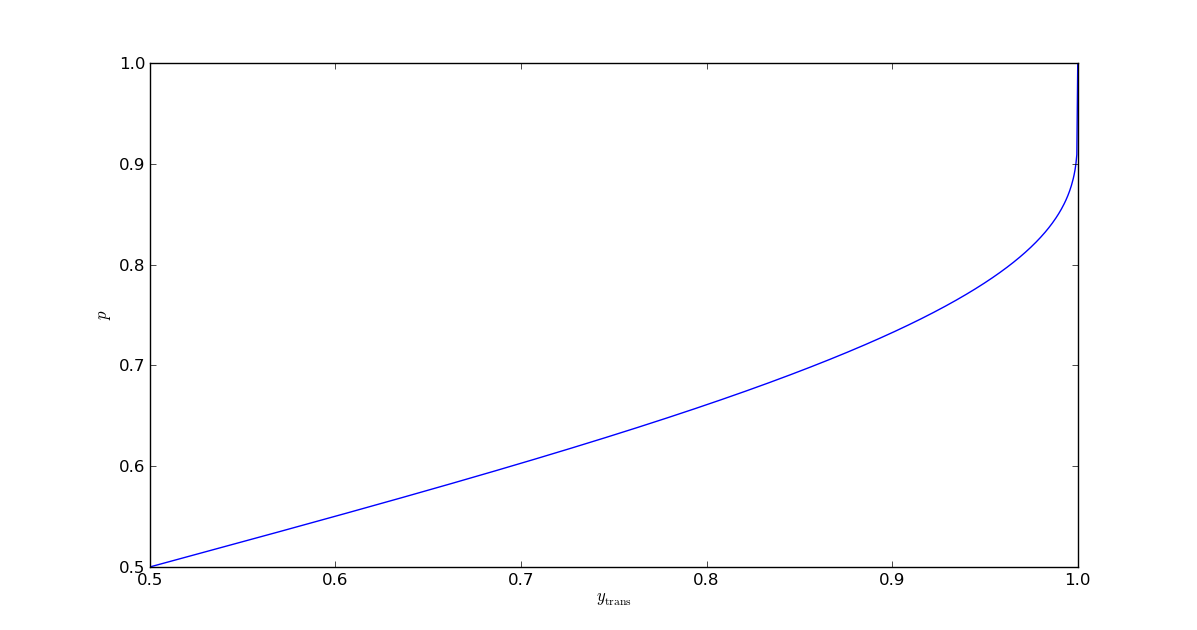

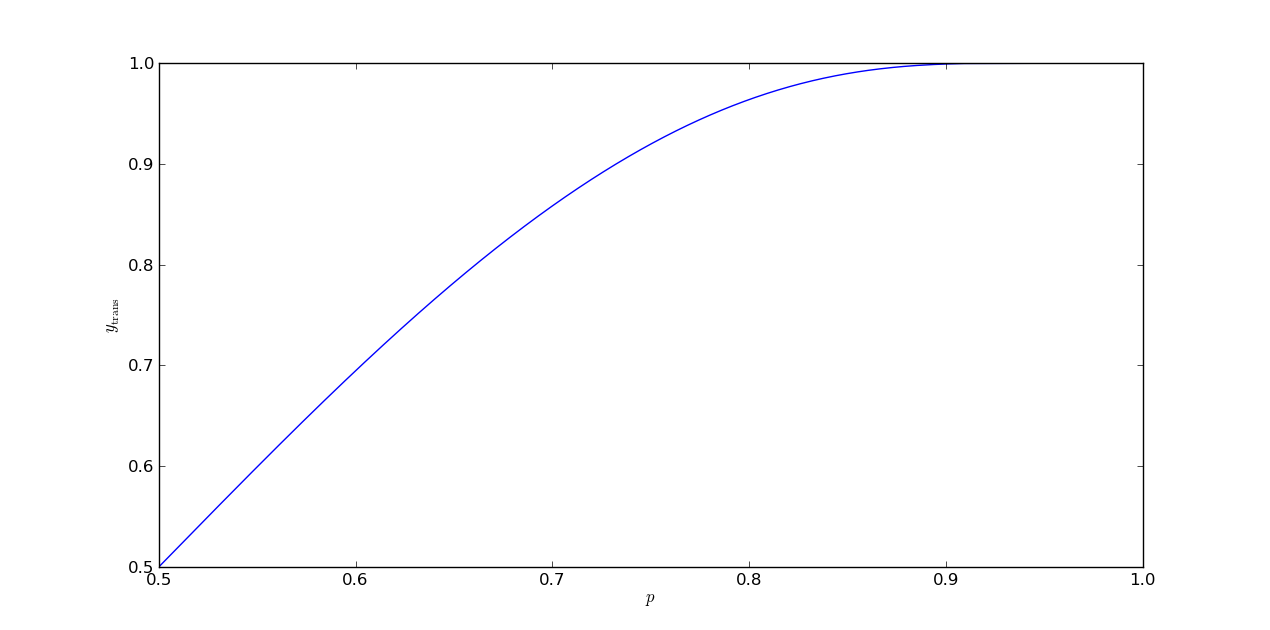

Unfortunately there is not a clean way to invert this function analytically. (If anyone knows how to represent using well known special functions, I would be very interested to learn.) Fortunately for us, it is one-to-one on the open interval , so the inverse of the function exists and can be calculated numerically. With this inverse in hand, we can write:

We have now solved our problem. Below are some plots showing the function and overlays of the asymptotic on top of the gain probabilities as a function of for various finite values of .

The values for were calculated using a numerical zero finder from scipy.optimize:

y_trans = scipy.optimize.brentq(lambda y: (log(2) + log(1 - y))/(log(1 - y) -

log(y)) - p, 1/2 + 1e-6, 1 - 1e-6)As of publication, I don’t know of any other works that solve this specific problem, but I haven’t done a particularly exhaustive search either. If you know of one, please bring it to my attention and I will gladly reference it here.